作者:容易 日期:2015-03-17

系统环境

硬件服务器配置

CPU:Intel(R) Xeon(R) CPU E7-4870 v2 @ 2.30GHz×4 总计60核

内存:512G

硬盘:采用fio卡,文件系统采用ext4文件系统

备注:通过bioss开启电源的最大性能和CPU最大性能模式。

如果BIOSS无法设置CPU最大性能模式可以通过系统级别设置

Cpu:设置CPU工作模式为最大性能模式

可使用如下脚本

for CPUFREQ in /sys/devices/system/cpu/cpu*/cpufreq/scaling_governor; do [ -f $CPUFREQ ] || continue; echo -n performance > $CPUFREQ; done

网卡软中断设置:大并发服务器的网卡软中断可能会固定在某核CPU上,可能会因为单核CPU无法满足网络软中断的处理请求,成为性能瓶颈,建议将网卡中断绑定在不同的CPU上,另外可以根据实际情况采用多网卡处理不同的业务流量。

网卡软中断绑定方式

网卡中断号多CPU绑定,需要网卡支持多队列模式,如果以下命令的结果就1行记录,说明网卡只支持单队列模式,无法进行多CPU绑定,如果是多行的话即可使用网卡绑定。

首先关闭irqbalance

[root@hpdl580-176 ~]# /etc/init.d/irqbalance stop

获取网卡中断号,第一行忽略,p1p1代表网卡接口名有些通常服务器是eth0或者em0之类的,根据实际情况调整。

cat /proc/interrupts |grep p1p1|awk ‘{print $1}’

115

116

117

118

绑定软中断到指定的CPU,这里的0 1代表第一核和第二核

echo 0 > /proc/irq/116/smp_affinity_list

echo 0 > /proc/irq/117/smp_affinity_list

echo 0 > /proc/irq/118/smp_affinity_list

echo 1 > /proc/irq/119/smp_affinity_list

#fio卡这段大家可以忽略,本次测试确保数据全部在内存里面,忽略IO的影响。

[root@hpdl580-176 ~]# fio-status -a

Found 2 ioMemory devices in this system

Driver version: 3.2.3 build 950

Adapter: ioMono

Fusion-io 1.65TB ioScale2, Product Number:F11-003-1T65-CS-0001, SN:1412G0967, FIO SN:1412G0967

ioDrive2 Adapter Controller, PN:PA005004003

PCIe Bus voltage: avg 12.12V

PCIe Bus current: avg 0.70A

PCIe Bus power: avg 8.40W

PCIe slot available power: unavailable

PCIe negotiated link: 4 lanes at 5.0 Gt/sec each, 2000.00 MBytes/sec total

Connected ioMemory modules:

fct0: Product Number:F11-003-1T65-CS-0001, SN:1412G0967

操作系统版本:

[root@hpdl580-176 ~]# cat /etc/redhat-release

CentOS release 6.6 (Final)

[root@hpdl580-176 ~]# uname -a

Linux hpdl580-176 2.6.32-504.el6.x86_64 #1 SMP Wed Oct 15 04:27:16 UTC 2014 x86_64 x86_64 x86_64 GNU/Linux

mysql数据库版本:

mysql> select version();

+------------+

| version() |

+------------+

| 5.6.20-log |

+------------+

1 row in set (0.00 sec)

系统内核参数设置,其他为默认设置

kernel.shmall = 4294967296

vm.zone_reclaim_mode = 0

kernel.core_uses_pid = 1

fs.aio-max-nr = 1048576

fs.file-max = 6815744

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

kernel.shmmni = 4096

vm.swappiness = 1

vm.nr_hugepages=208896

用户limit设置

* soft memlock -1

* hard memlock -1

* soft nproc 10240

* hard nproc 16384

* soft nofile 65536

* hard nofile 65536

mysql配置

[root@hpdl580-176 ~]# cat /etc/my.cnf

[client]

socket = /app/mysql/run/mysql.sock

user=root

[mysqld]

server_id = 2

user=mysql

port = 3306

bind-address = 0.0.0.0

max_connections = 3000

max_connect_errors = 30

back_log = 600

socket = /app/mysql/run/mysql.sock

pid-file = /app/mysql/run/mysql.pid

basedir = /app/mysql

datadir = /app/mysql/data

log-error = /app/mysql/log/mysql_err.log

log-warnings = 1

log-bin=/app/mysql/data/log-bin

expire_logs_days=5

max_binlog_size = 512M

binlog_format=mixed

long_query_time=0.03

skip_name_resolve=on

slow-query-log=1

slow-query-log-file = /app/mysql/log/mysql_slowquery.log

explicit_defaults_for_timestamp=true

open_files_limit = 10240

table_open_cache = 4096

max_allowed_packet = 32M

binlog_cache_size = 256M

max_heap_table_size = 128M

sort_buffer_size = 16M

join_buffer_size = 16M

read_buffer_size =1M

thread_cache_size = 220

query_cache_limit = 4M

ft_min_word_len = 8

character-set-server = utf8

thread_stack = 1024K

transaction_isolation = REPEATABLE-READ

tmp_table_size = 128M

sync_binlog=1

innodb_thread_concurrency=0

innodb_buffer_pool_size = 128G

innodb_buffer_pool_instances = 64

innodb_flush_method = O_DIRECT

innodb_sync_spin_loops=11

innodb_spin_wait_delay=12

innodb_page_size=8192

query_cache_type = 0

query_cache_size=0

innodb_data_home_dir = /app/mysql/data

innodb_data_file_path = ibdata1:128M:autoextend

innodb_write_io_threads = 12

innodb_read_io_threads = 24

innodb_flush_log_at_trx_commit = 1

innodb_log_buffer_size = 16M

innodb_log_file_size = 512M

innodb_log_files_in_group = 3

innodb_flush_method = O_DIRECT

innodb_log_group_home_dir = /app/mysql/data/

innodb_max_dirty_pages_pct = 77

innodb_lock_wait_timeout = 100

innodb_flush_neighbors = 0

innodb_io_capacity=4000

innodb_io_capacity_max=20000

relay-log = /app/mysql/data/relaylog

max_relay_log_size = 512M

relay_log_purge = 1

relay_log_recovery = 1

binlog_checksum = CRC32

slave_allow_batching = 1

master_verify_checksum = 1

slave_sql_verify_checksum = 1

binlog_rows_query_log_events = 1

master_info_repository = TABLE

relay_log_info_repository = TABLE

log_slave_updates

测试用例

简单模拟用户交易记录的分页查询,纯粹的查询极限测试,确保所有数据全部在内存里面保证cache命令率%100.

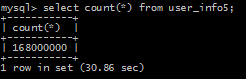

表数据量 1.68亿行

表的平均行长度等信息

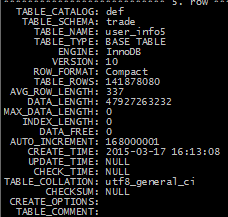

表结构和索引如下

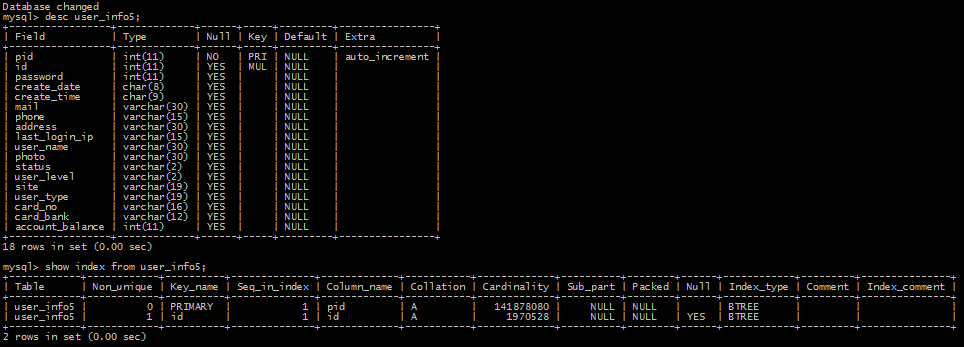

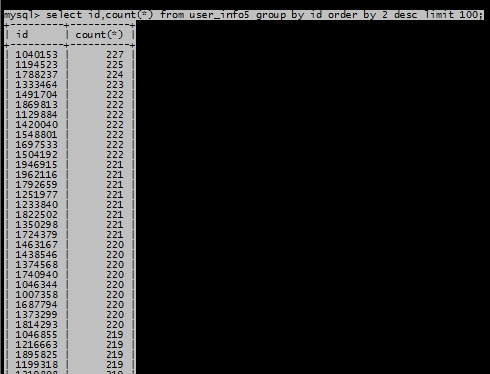

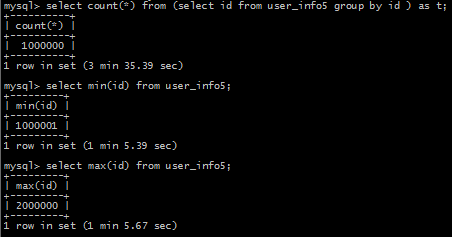

数据分布如下,ID范围 1000001~2000000总共有100W个ID值,平均每个ID涉及到的行数是114~227行,同ID的数据也分散在不同的数据块中。

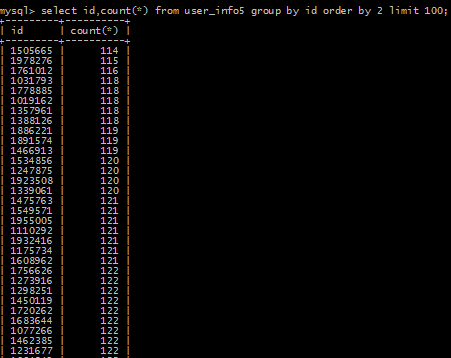

测试sql如下,其中%s是变量 id随机值1000001~2000000 limit%s 0~100, /*xx*/是根据并发的ID自动生成,由于之前oracle压力测试遇到过cursor: pin S的问题所以在Mysql测试的时候也加上了/*xx*/这个,是否有影响没有注意过。

select /*xx*/ pid,id,password,create_date,create_time,mail,phone,address,last_login_ip,user_name,photo from user_info5 where id=%s limit %s,10

压力源采多进程并发的,与mysql的数据库连接为长连接。

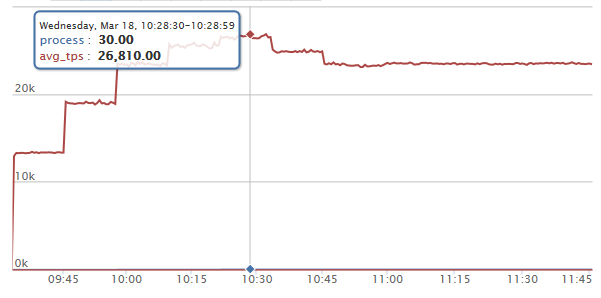

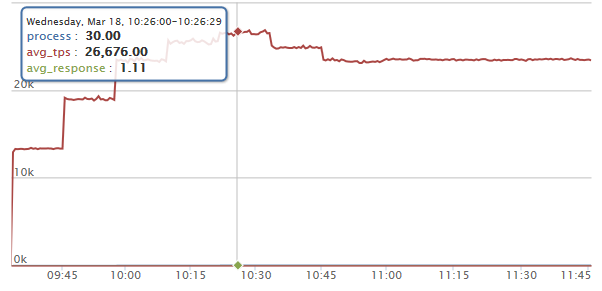

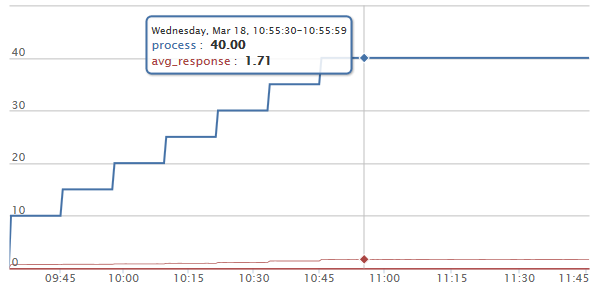

场景一、最大并发数为40进程,初始并发数为10个进程,每个12分钟增加5个并发,进程数达到40并发后,持续60分钟。

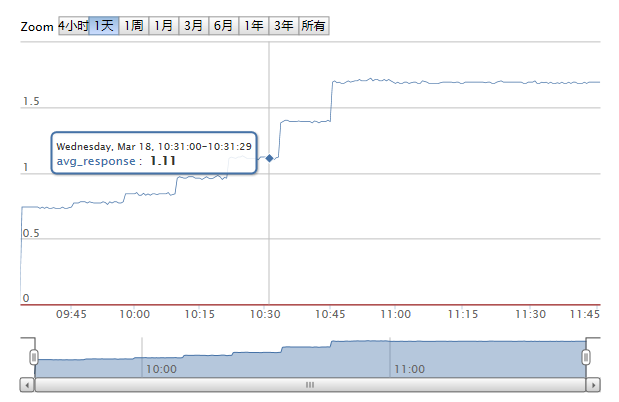

响应分布和平均响应时间如下,单位时间为毫秒

[root@mdb-166 news]# python mysql_run_time_load_status4.0.py user_info5_pro_10_40

fag file name is user_info5_pro_10_4018_093339.fag ,delete this file test will finish.

Job_Pid:74266 Process:40 Job_Start_Time:2015-03-18 09:33:39

Total_suc_fail:179560968,0;tps/s:23421;max_avg_min_rt(ms):33.51,1.32,0.31:running:40:needtime:10:99% ||..............

Task_complete_time:2015-03-18 11:45:40

Total Request:179815217 Complete Request:179815217 Fail Request:0 Succeed_pre:100.0% total_elapsed_time(s):238081.1

Min_Avg_Max_response_time(ms):0.31,1.32,33.51

Response Time(ms) Statistics Percentile

0.79: 26.35%

0.93: 37.86%

1.06: 47.49%

1.19: 55.40%

1.32: 61.98%

1.59: 73.12%

1.85: 81.05%

2.12: 86.78%

9.27: 100.00%

11.92: 100.00%

Run_Time(s):7920.37

Job_End_Time:2015-03-18 11:45:40

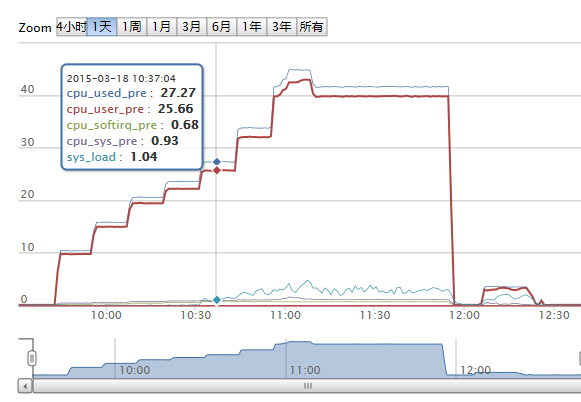

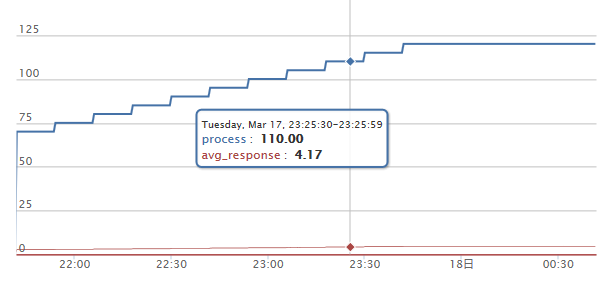

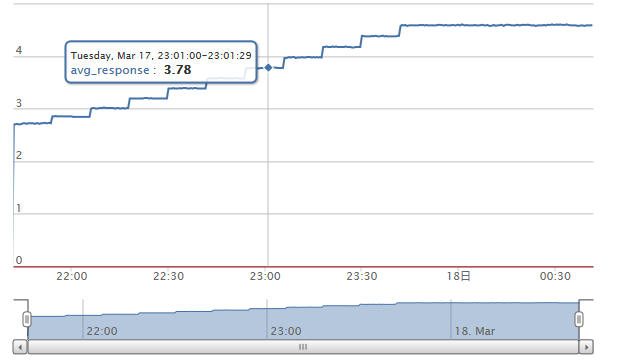

实时响应监控

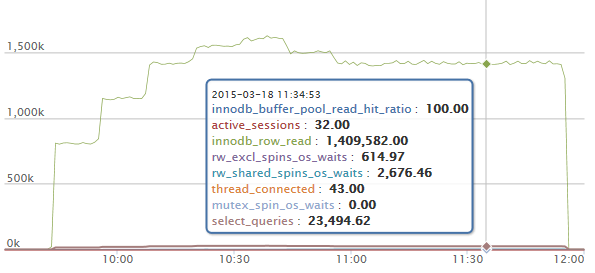

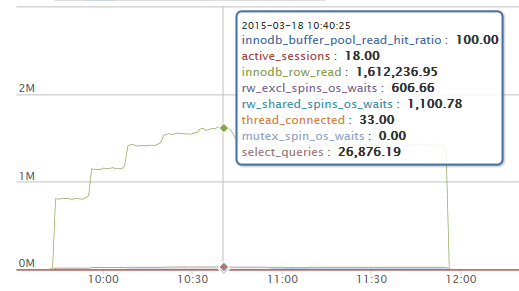

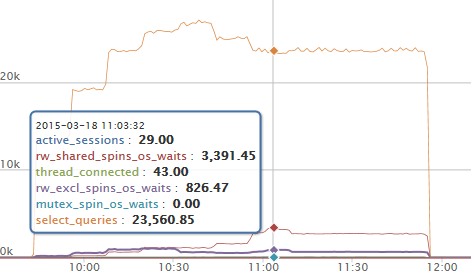

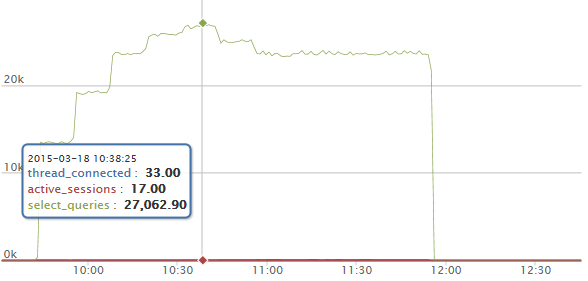

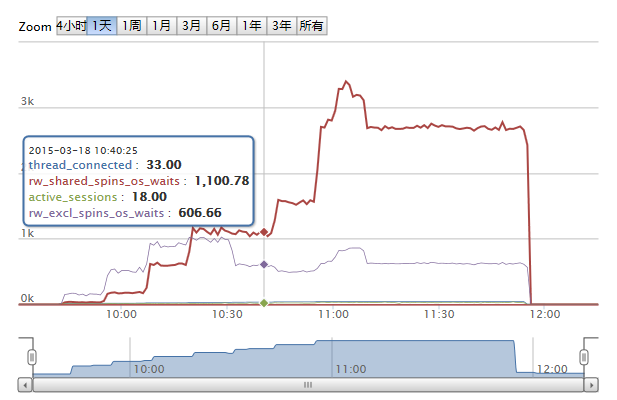

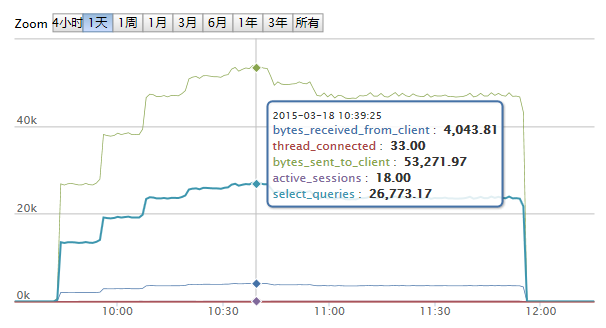

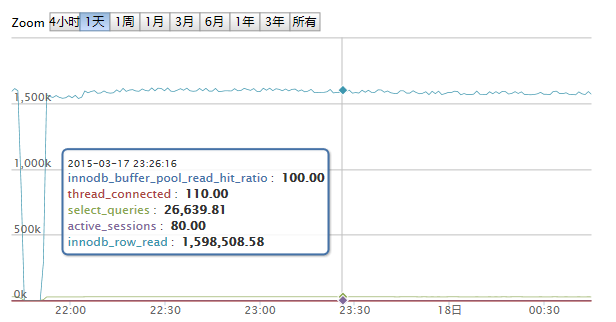

mysql实时监控信息如下

网络流量单位KB

服务器资源监控

测试结果分析

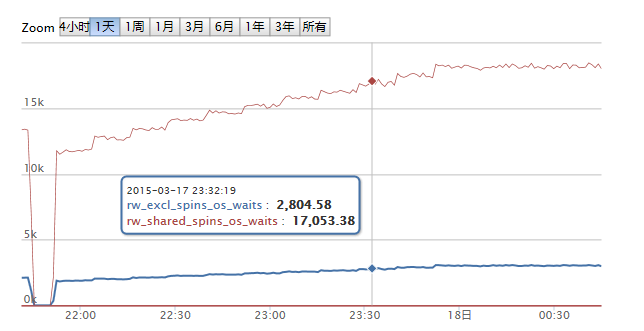

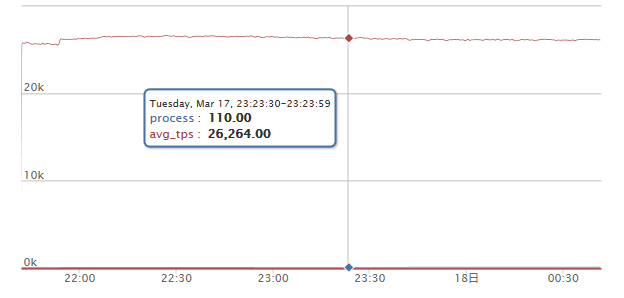

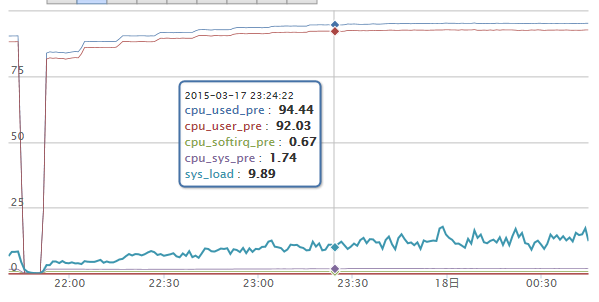

通过mysql的innodb_row_read与每秒的select_queries(QPS)计算得出每次查询平均需要读取大约60行的数据,并发数在30时,从每秒QPS以及系统资源利用率等性能指标达到最优,并发数超过30后,mysql的rw_shared_spins_os_waits和rw_excl_spins_os_waits等待增加,造成mysql服务器的cpu利用率上升和QPS的响应时间增加,每秒QPS也随之下降。

各位大神帮忙指点下如何去优化

mysql的rw_shared_spins_os_waits和rw_excl_spins_os_waits等待

因为此次测试是纯查询测试为何rw_excl_spins_os_waits等待也会比较高

以下是一个最大并发120进程,初始并发70进程,每12分钟增加5个进程,当达到120并发时持续60分钟的测试结果,与上面的测试除了并发数不同其他环境一致。

[root@mdb-166 news]# python mysql_run_time_load_status4.0.py user_info5_pro_120

fag file name is user_info5_pro_12017_214155.fag ,delete this file test will finish.

Job_Pid:54305 Process:120 Job_Start_Time:2015-03-17 21:41:55

Total_suc_fail:282983921,0;tps/s:26081;max_avg_min_rt(ms):182.77,3.86,0.36:running:120:needtime:2:99% ||...................................................

Task_complete_time:2015-03-18 00:41:56

Total Request:283067664 Complete Request:283067664 Fail Request:0 Succeed_pre:100.0% total_elapsed_time(s):1092674.7

Min_Avg_Max_response_time(ms):0.34,3.86,182.77

Response Time(ms) Statistics Percentile

2.32: 30.32%

2.70: 38.99%

3.09: 47.44%

3.47: 54.84%

3.86: 62.09%

4.63: 72.52%

5.40: 79.85%

6.18: 85.35%

27.02: 99.99%

34.74: 100.00%

Run_Time(s):10801.10

Job_End_Time:2015-03-18 00:41:56

各种性能指标监控如下

从测试结果看与之前的测试结果基本一致

随着并发数的增加,mysql的rw_shared_spins_os_waits和rw_excl_spins_os_waits等待增加,造成mysql服务器的cpu利用率上升和QPS的响应时间增加,每秒QPS也随之下降。

One Response